The invisible front

10 November 2025

18 August 2025

Disinformation has influenced public opinion since time immemorial. What is new is how quickly it spreads today and how convincing it has become. With the help of artificial intelligence (AI) and a little practice, almost anyone can now generate authentic-looking images or manipulate audio and video recordings. How does this affect our democracy and security?

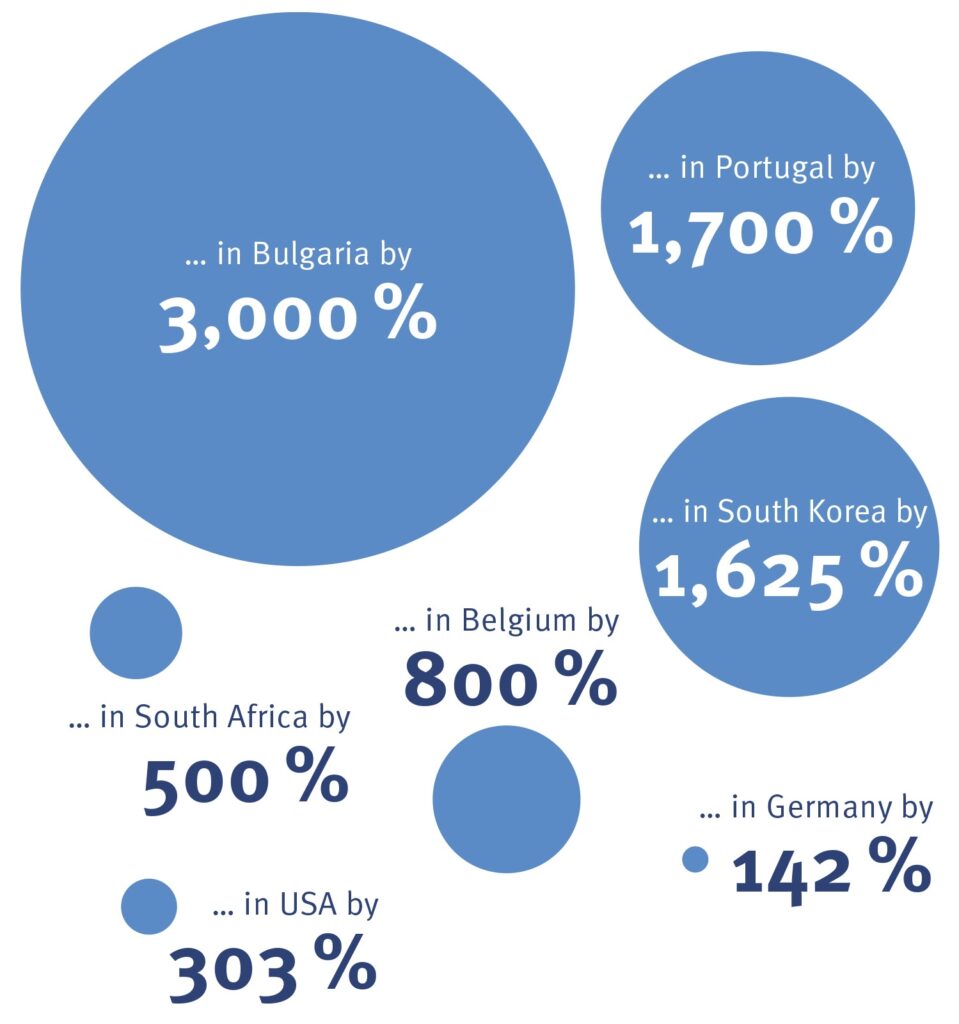

Deepfakes in numbers

Increase in deepfakes in countries with elections in 2024 …

Source: Sumsub Data Reports

ZDF presenter Christian Sievers appears to promote dubious investments, German Chancellor Friedrich Merz seems to issue a statement disparaging democracy, and US pop icon Taylor Swift has been targeted with fake pornographic images. Deepfakes like these are circulating online en masse. They are image, video, or audio files that have been manipulated or generated using artificial neural networks and deep-learning methods. The tools required are freely available. The more data an AI model receives about the appearance, facial expressions, and gestures of the people concerned, the more authentic and realistic the computer-generated fakes appear.

Long tradition

Omitting context, altering facts, spreading lies: fake news is not a new phenomenon. Its history dates back more than 2,000 years. Since ancient times, disinformation campaigns have been used to discredit political opponents, gain financial advantages, unsettle the public, or stir up mistrust, fear, and anger. However, with the possibilities offered by social media and AI technologies today, disinformation is reaching a new dimension.

Identity fraud is rising rapidly

According to the Identity Fraud Report published by the Entrust Cybersecurity Institute in early 2025, a deepfake attack took place every five minutes in 2024, and the number of forged digital documents and identities rose by 244 percent compared to 2023. At the same time, scams are becoming increasingly sophisticated. One particularly high-profile case involved luxury car manufacturer Ferrari: using a convincingly imitated, AI-generated voice of CEO Benedetto Vigna, cybercriminals attempted to initiate a large money transfer. The manager they contacted, however, became suspicious. Other companies, such as a subsidiary of payment provider PayPal and the US network component manufacturer Ubiquiti Networks, were less fortunate.

Propaganda weapon of the future

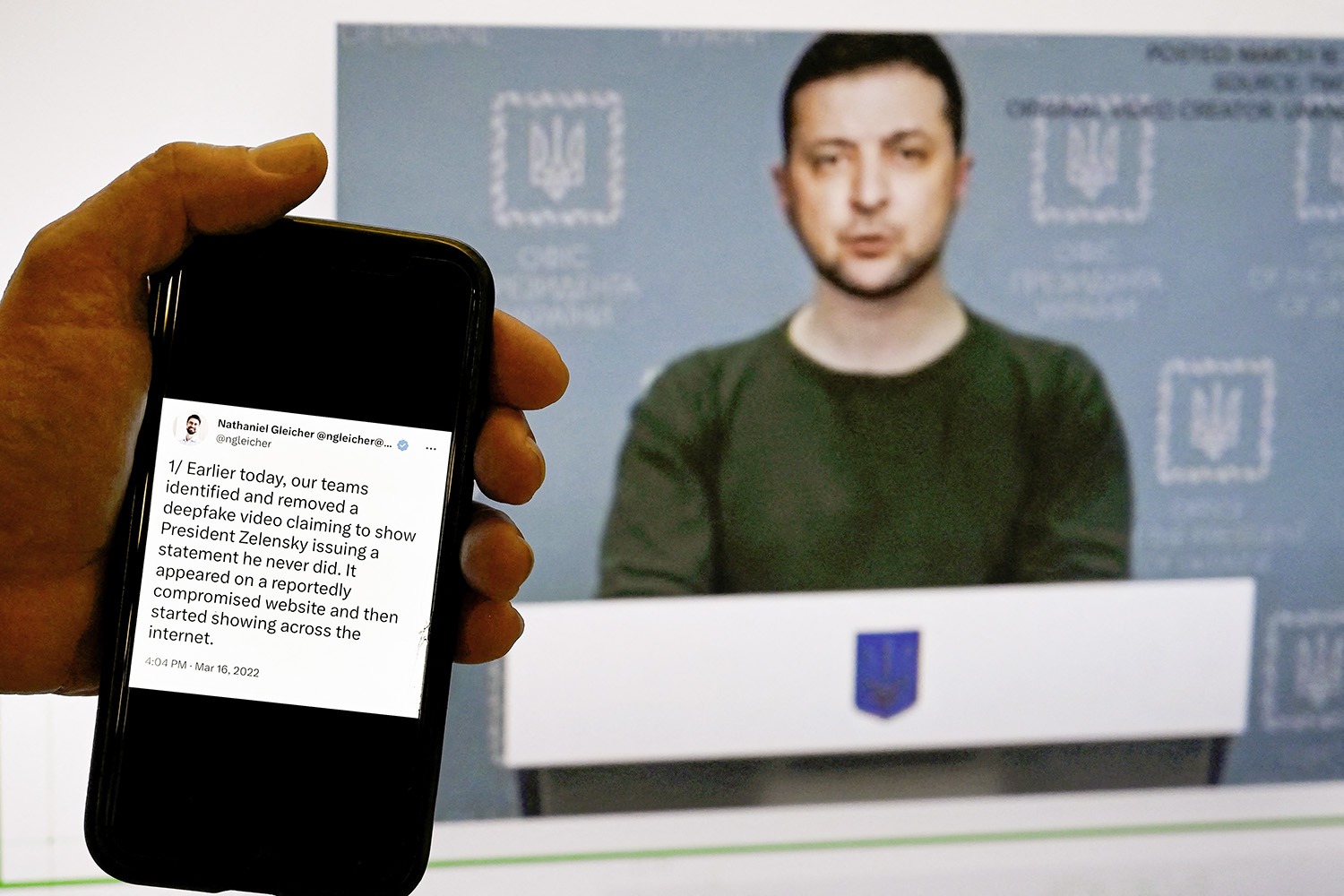

However, deepfakes are not only a growing threat to businesses. As fake audio and video recordings become more widespread, so does the risk that they will be used to exert political influence on elections, public discourse, and state conflicts. In March 2022, a fake video appeared in which Ukrainian President Volodymyr Zelensky seemed to announce a surrender. While first-generation deepfakes like this were relatively easy to expose, today’s high-quality fakes are much more difficult to detect. Which eyewitness reports from the war zones are real, and which are fake?

Loss of trust

Deepfakes cast doubt on the veracity of news, fuel conflicts, and demoralise people. They undermine trust in democratic institutions and contribute to polarisation and social division. The more anger a post triggers, the more likely people are to react to it, which in turn boosts its reach on social media. Another effect is the so-called liar’s dividend: the loss of trust caused by deepfakes makes it easier for autocrats and populist parties to dismiss genuine videos as fake.

Targeting deepfakes precisely

To minimise the risks posed by deepfakes, solid digital literacy is essential. Suspicious audiovisual media should be examined critically and verified against other sources. At the same time, AI-based detection systems and robust security protocols can help.